IstoVisio · B2B · Voice · AI

Redefined Task Execution with an AI Voice Interface

Voice Interface

IstoVisio · January 2025

Users: Scientists

An AI-powered voice command system that turns spoken instructions into system actions, reducing the need to navigate complex dashboards.

My role: Founding Product Designer

What I owned: End-to-end product design lead (0–1). Problem framing, interaction model, voice UX, guardrails, and prototype validation in collaboration with engineering.

PROBLEM

Scientists know exactly what they want to do, but completing the action requires navigating multiple panels and repeating configuration steps. Over time, this friction disrupts focus and increases the risk of mistakes during complex analyses.

IDENTIFYING WORKFLOW BOTTLENECKS

To identify workflow friction, I spoke with eight scientists across Europe and the US and observed how they worked inside the platform. Three patterns emerged:

Getting to the right view took too many steps

Scientists knew exactly what they wanted to see, but reaching that view required navigating multiple panels and repeating the same setup process.

Small adjustments constantly interrupted the flow

Toggling layers, switching visibility, and checking different configurations were quick actions, but they broke concentration when done repeatedly.

Frequently used settings were buried in menus

Even experienced users had to dig through settings to access controls they relied on daily, interrupting their focus and slowing expert workflows.

WHAT THIS MEANT

Scientists didn’t need more features.

They needed a faster way to act on what they already knew.

Voice wasn’t about replacing the interface.

It was about shortening the distance between intent and execution.

DEFINING THE VOICE COMMAND EXPERIENCE

Designing voice for a scientific tool required a different approach. I mapped the interaction from spoken command to system response to ensure accuracy where it mattered most.

REMOVING BARRIERS TO ADOPTION

Introducing voice commands changes how scientists interact with the system. For the feature to succeed, users must trust it. I identified the key barriers to adoption and designed the system to address them.

Speech Variability

Researchers speak differently across accents and phrasing.

Design response

• Multiple command variations

• Flexible phrase recognition

• Recovery prompts

Learnability

Users cannot memorize voice commands.

Design response

• Categorized commands

• On-demand command menu

• Tutorials

Workflow Integration

Researchers rely on existing interaction patterns.

Design response

• Hybrid voice + menu model

• Voice as an optional shortcut

SHAPING THE SYSTEM IN PRACTICE

Designing the interaction model was only part of the work. As the system moved closer to real use, several constraints began shaping how voice could actually behave in the product.

Speech varied widely between users, commands needed to be easy to learn, and mistakes had to be recoverable without breaking the workflow. Addressing these constraints ultimately defined how the voice system was structured.

Supporting diverse speech patterns

To accommodate different accents and levels of English fluency, the system supports multiple natural variations for each command. Instead of forcing users to memorize a single phrase, the model recognizes several ways of expressing the same intent.
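As a rough illustration of the idea, the sketch below maps several spoken variations onto one intent. It is not the shipped implementation: the intent names, the phrase lists, and the `resolve_intent` helper are all hypothetical, and a production system would sit behind a speech recognizer rather than match plain strings.

```python
import re
from typing import Optional

# Hypothetical phrase table: each intent lists several accepted wordings.
COMMAND_VARIATIONS = {
    "decrease_opacity": [
        "decrease opacity",
        "lower opacity",
        "reduce opacity",
        "make it more transparent",
    ],
    "show_command_menu": [
        "what can I say",
        "show commands",
        "help",
    ],
}


def normalize(utterance: str) -> str:
    """Lowercase, drop punctuation, and collapse repeated whitespace."""
    cleaned = re.sub(r"[^a-z0-9\s]", "", utterance.lower())
    return " ".join(cleaned.split())


# Reverse lookup built once: normalized phrase -> intent name.
PHRASE_TO_INTENT = {
    normalize(phrase): intent
    for intent, phrases in COMMAND_VARIATIONS.items()
    for phrase in phrases
}


def resolve_intent(utterance: str) -> Optional[str]:
    """Return the intent for a transcribed utterance, or None if unknown."""
    return PHRASE_TO_INTENT.get(normalize(utterance))
```

With this table, "Lower opacity!" and "reduce opacity" resolve to the same `decrease_opacity` intent, while an unlisted phrase returns `None` and can be handed to a recovery prompt.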

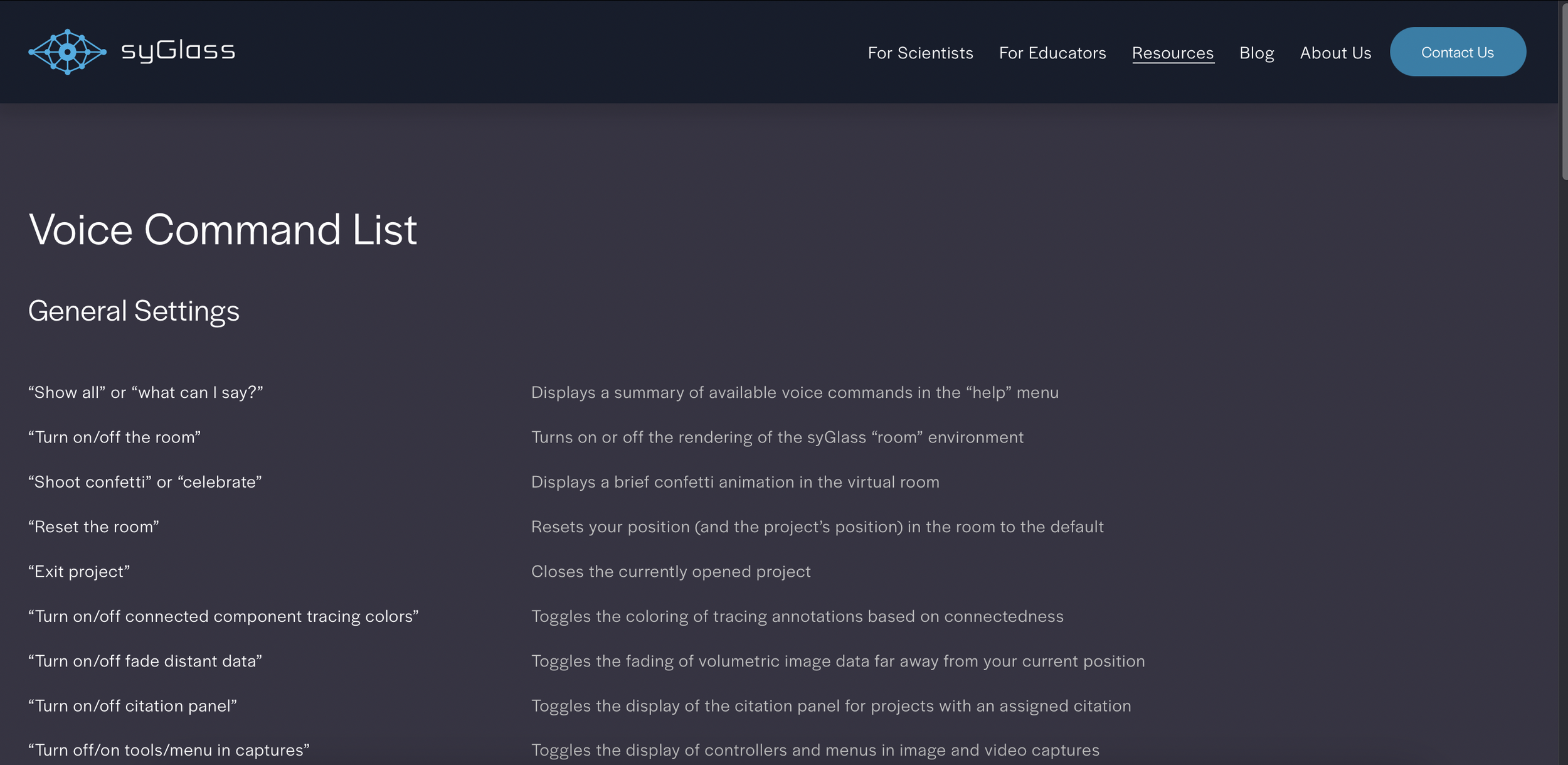

Making commands easy to learn

Commands were organized into a predictable structure such as toggles and sliders. Tutorials and documentation helped introduce the feature, while the structure itself reduced the need for memorization.

Pic. 1 In-VR Guide – Quick command lookup

Pic. 2 Tutorial – Helps users learn commands quickly

Pic. 3 Web Documentation – Detailed guide for advanced use

Designing for error recovery

Voice interactions rarely work perfectly the first time. I mapped the command structure into components such as activation command, command name, and slot value. This allowed the system to recognize partial inputs and suggest possible completions when a phrase was only partially understood.

Visual feedback shows when the system is listening, so users know their voice is being detected and don’t repeat commands unnecessarily.

Clear prompts when the system doesn’t understand (e.g., “I didn’t understand that. Try saying ‘Decrease opacity’ or ‘What can I say’”) helped users recover quickly and stay confident using voice commands.
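The activation command, command name, and slot value structure can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the product's parser: the wake word `computer`, the command table, and the `parse` function are hypothetical, and only the fallback prompt wording is taken from the example above.

```python
from dataclasses import dataclass
from typing import Optional

WAKE_WORD = "computer"  # hypothetical activation command

# Hypothetical command table: command name -> whether it needs a slot value.
COMMANDS = {
    "set opacity": True,
    "decrease opacity": False,
    "what can i say": False,
}

FALLBACK_PROMPT = (
    "I didn't understand that. Try saying 'Decrease opacity' or 'What can I say'."
)


@dataclass
class ParseResult:
    status: str  # "ok", "partial", "unknown", or "ignored"
    command: Optional[str] = None
    slot: Optional[str] = None
    prompt: Optional[str] = None


def parse(utterance: str) -> ParseResult:
    words = utterance.lower().split()
    # Without the activation command, the speech is not addressed to the system.
    if not words or words[0] != WAKE_WORD:
        return ParseResult("ignored")
    rest = " ".join(words[1:])

    # Full match: the text is, or starts with, a known command name.
    for name, needs_slot in COMMANDS.items():
        if rest == name or rest.startswith(name + " "):
            slot = rest[len(name):].strip() or None
            if needs_slot and slot is None:
                return ParseResult(
                    "partial", command=name, prompt=f"What value for '{name}'?"
                )
            return ParseResult("ok", command=name, slot=slot)

    # Partial match: the text is a prefix of one or more command names.
    candidates = [name for name in COMMANDS if rest and name.startswith(rest)]
    if candidates:
        options = " or ".join(f"'{c}'" for c in candidates)
        return ParseResult("partial", prompt=f"Did you mean {options}?")

    return ParseResult("unknown", prompt=FALLBACK_PROMPT)
```

Here "computer set opacity 40" parses fully, "computer set opacity" asks for the missing slot value, "computer decrease" suggests the one command it could complete to, and anything else after the wake word falls back to the recovery prompt.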

FINAL DESIGN

IMPACT

Voice commands reduced the time required to complete common workflows by 41%*, significantly lowering friction for expert users.

*Data collected via a post-launch survey

REFLECTION

This project changed how I think about “accuracy” in AI interfaces. Users do not need perfection, but they do need a system that behaves predictably when it is wrong.

The real design work was setting expectations, making the system’s understanding visible, and giving users a fast way to correct course. In practice, adoption depended less on recognition quality and more on whether the interface made users feel in control.